Changing the Climate of Fear and Deception

"Gonna get my Ph.D. I’m a teenage lobotomy." -Teenage Lobotomy by The Ramones

Among Christians the word “parable” is often associated with the teachings of Jesus in the Gospel, but the word and its principle can also stand on its own. The idea behind a parable is simple – teaching by example often leads to clearer understanding and better decision-making. So even though the focus of this article is how to respond to the incessant calls that we must “take action to address climate change,” this article begins with the guidance of a parable on a different subject.

*** The Parable of The Good Doctor ***

Imagine a scenario in a dystopian society which begins as follows. A doctor is visited by a patient who says “I have a headache.” Now this may not appear to be a vexing problem to us, but in this made-up dystopian society, the problem is dreadfully complex.

Unfortunately, in this imagined society, a small group of people called the Ruling Elite have taken control of the government. Their philosophy is guided by an adaptation of the teachings of Friedrich Nietzsche, who authored several texts including “Beyond Good and Evil” and “On the Genealogy of Morality”. For those unfamiliar with Nietzsche, a video lecture by Professor Michael Sugrue serves as a captivating introduction. To orient the reader to what is meant by the term “Nietzschean Philosophy”, consider the following excerpts of Professor Sugrue’s lecture.

*** “Nietzsche wants to provoke us to a sort of Atheistic heroism which replaces religion with art.” – Prof. Michael Sugrue ***

Prof. Sugrue states: “Nietzsche viewed himself as the end of the Western intellectual tradition… He called himself the Anti-Christ more than once, and he wrote a book called ’The Anti-Christ’. And what he hoped to do was to get beyond Christianity. He quite literally thought that he was going to supplant Jesus in the Western Tradition, which is quite a tall order... He wanted to offer a new set of values. He wished to offer a new cultural orientation to the West, or perhaps to revive an old cultural orientation in the West. And he wished to make us confront the half-truths, the lies, the evasions, that are characteristic of almost everyone’s life and thought…”

“Nietzsche wishes to offer a criticism of Christianity and the values it represents and, in addition, to supplant that with a new code of morals. Nietzsche asks an important question – a question that everyone living in this modern age, this One-World, Anti-Metaphysical Age has to confront – which is: Where does Christianity and its moral values come from? How is it that human beings, conceived of as animals, as featherless bipeds, how is it that they come to have a thing called a conscience? How is it that they make judgments of Good and Evil, which is not characteristic of fish or birds or reptiles? What is it about human beings which causes them to construct moral systems which are in some respects the antithesis of Nature? Why is it that we feel the desire to conflict with Nature and make judgments that Nature never does?

“Nietzsche thinks that he finds the answer in the psyche of the human individual, and he thinks that we are very peculiar animals – animals with strange attributes, which are natural and which are part of the human condition. But in fact, Nietzsche says that most of our elevation of ourselves beyond Nature, beyond simple facticity, is a kind of spurious raising of ourselves outside and above Nature... Nietzsche wants us to confront the fact that we are animals, that we are part of Nature, and that our moral judgments are in fact part of that natural process. In looking at Christianity, Nietzsche goes back to the actual history of Christianity and asks: What is it that Christian morals represent? Whose interests do they serve? And why are these interests disguised? What is it about the moral judgments characteristic of Christianity that needs to be disguised? What is it, in other words, that is a lie in Christian morals? A remarkable question. Nietzsche’s answer is that there are two kinds of morality, and Christianity only represents one particular perspective on judgments of Good and Evil, and this perspective is the perspective of the Herd.

“Nietzsche contrasts two kinds of morals: Herd Morals – morals that are characteristic of the weak, the feeble, the inferior, the enslaved, which is to say Christianity – and Master Morals – the morals of Warriors, the predatory human beings who make judgments on the basis of their strength rather than their weakness. Nietzsche wishes to supplant the Herd Morals, characteristic of Christianity and characteristic of Western Culture for the previous two thousand years, with a new (set of) morals, morals which harken back to the age of the Homeric Hero, which harken back to the Aristocratic Warrior Elite, which is found all over the world, which creates values out of nothing and which glories in the fact that their judgments can be enforced in this world independent of any metaphysical construct.”

“Nietzsche asks: Who was it that created Christianity? Well, it is an outgrowth of Judaism, and Judaism is a priestly religion. Christianity is an extension of that, and Christianity in its earliest historical phases appeals to the Slave Class in Rome. In fact, Christianity is the revolt of the oppressed masses in Rome. Nietzsche argues that Christianity and its myths was organized so as to articulate the perspective of the Slaves, of the inferior, of the feeble. And it was a way of having revenge upon Masters, having revenge upon Warriors, having revenge upon those who could oppress them and turning the tables. By offering a scale of values that was different from and independent of that of the Master Class, Nietzsche says that the Slaves have their revenge. And the triumph of Christianity in the West amounts to the triumph of the Slave, of the inferior, of the weak and impoverished.”

A second video lecture by Prof. Michael Sugrue expands upon Nietzsche’s ideas on the broader topic of Western philosophy. One excerpt near the 8:30 mark is as follows:

“Nietzsche hated Socrates. He had great respect for Socrates. He said ‘Finally, someone worth talking about and worth talking to.’ The problem with Socrates is, in many ways, the same as the problem with Jesus. The problem with both men is that they generated a metaphysics which led the tradition of Western culture out of the world of space and time. Socrates, in The Republic, invents the world of the forms – where we keep perfect beauty and perfect truth. Jesus has heaven, where we keep God and the Holy Spirit, and the saints and the martyrs, and all that. Nietzsche says, ‘In both cases, the problem here is not the particular dogma that is involved but rather the whole idea of metaphysics.’ He says, ‘I have an idea. The world we’re in? This is the world! And there is no other world to be in. So let’s not talk about metaphysics of the Greek kind or of the Christian kind any more. Instead, we should adopt a new position. We will love the Earth. We will find what is best in this world, and we will do what we can to improve and perfect human possibilities here and now.’”

One can see how these final few statements might have some appeal. However, the interpretation of Nietzsche’s philosophy in the 20th century is a subject of debate, as Prof. Sugrue describes at a later point in the initial referenced video lecture:

“Many of Nietzsche’s friends and his philosophical admirers have tried to shield Nietzsche from the criticism that he is a proto-Nazi. And in fact, I don’t think it works. I think in fact Nietzsche is a proto-Nazi. There is a strong tinge of racism in his work. There is a strong tinge of refined cruelty in his work. And insofar as this was misused in the 20th century by the Nazis and by other racially-oriented hate groups, I think Nietzsche is at least in part to blame. Those who like Nietzsche’s work and want to shield him from this criticism will point out with considerable justification that he would have nothing but contempt for the misuse of his work in this century. But it would not entirely absolve him of the results of his statements because after all, Nietzsche would have had contempt for everybody, whether they adopt his position or not.”

***

In our fictitious society, the Ruling Elite fear that the Herd might become more intelligent than they are. If that were to happen, their entitlement to rule over the Herd might be compromised. So the Ruling Elite assemble a plan to reduce the intelligence of the Herd. They have lavishly paid their government scientists to perform fake studies and write fraudulent articles which proclaim that headaches are very dangerous and lethal. Furthermore, they form a panel which dictates that the only approved way to deal with a headache is to give the patient a frontal lobotomy. Of course, a *full* frontal lobotomy would lead to *obvious* problems, so the procedure removes a very small portion of the brain, varying from patient to patient. The Nietzschean government also controls the regulators who approve the procedure and describe the frontal lobotomy operation as the “safe and effective” method to cure a headache. This constitutes one step by the Ruling Elite toward establishing a climate of fear and deception regarding headaches in order to convince the Herd to have a small portion of their brains removed.

The same Nietzschean government subsidizes the health insurance companies which advocate for a “safe and effective frontal lobotomy” and refuse to pay for any other headache treatment. Moreover, the Nietzschean government in our make-believe society ensures that every doctor who advocates for frontal lobotomies is given a generous commission for every procedure performed.

The population in our imaginary dystopian society has been conditioned to believe that frontal lobotomies are the ideal cure for headaches because a hefty percentage of the profits is directed to each media outlet. This might seem implausible to many of us in the real world, but in our unfortunate imaginary dystopian society, the most influential media outlets have been purchased by the same Nietzschean group that controls the government. So, these media outlets repeatedly present news stories of people with headaches and glorify the frontal lobotomy as a godsend to those who suffer. Reporters for local media affiliates are assigned the job of tracking down individuals with brain cancer and interviewing them by asking, “Did you ever have a headache before you were diagnosed?” When they get an affirmative reply from the unfortunate brain cancer victim, it is broadcast with the insinuation that headaches can lead to brain cancer and death if left untreated by frontal lobotomy.

Of course, the outcome of a frontal lobotomy is far worse than the headache, but the media does not report on those suffering individuals because it would disrupt their business model. Most of the population is conditioned to believe that headaches can get so terrible that if a “few people suffer from the side effects” of a frontal lobotomy, it is probably worth the trade-off. The owners of these media conglomerates reap massive advertising revenue during their news broadcasts.

The entertainment industry in our make-believe society is also controlled by the Nietzscheans. In fact, musical performances celebrate the joy brought about by the frontal lobotomy as frenzied crowds dance wildly. (Figure from the movie - “Rock and Roll High School”)

The Nietzschean group in our imaginary society acts with a very clever approach, called a Hegelian Deception, to support the notion that a frontal lobotomy is the best way to cure a headache. Counter-intuitively, it has hired doctors to proclaim that there is no such thing as a real headache. They go on media outlets and express staunch opposition to the government position, but in fact these doctors are controlled by the same Nietzschean group which supports the frontal lobotomies as a cure for a headache. These “controlled opposition” doctors say that every individual who thinks they have a headache should do absolutely nothing because the perceived headache is not real but is instead a product of media persuasion. This statement is often, but not always, correct.

Some of the controlled-opposition doctors then go on to make unsupportable and outrageous claims. For example, they announce that certain influential celebrities, government officials, and media personalities are not really human beings but are from the planet Pluto. They suggest that their true appearance resembles that of a savage, enormous platypus with large teeth and that they eat puppies to survive on Earth. The controlled-opposition doctors suggest these strange beings are camouflaged to appear human. The controlled-opposition doctors make these absurd and obviously false claims in order to discredit everyone who opposes frontal lobotomies as a treatment for headaches. They are labeled “anti-cure” by the (also controlled) media. Anyone who suggests that a frontal lobotomy should not be performed on a person with a headache is also labeled “anti-cure.” The controlled media then warns that any “anti-cure” dissenters are likely to be spouting the dangerous rumor that political leaders, celebrities, and news media personalities are actually a posse of puppy-eating Plutonian platypuses personified. If this proposition sounds preposterous, that is the point.

Other “Controlled Opposition” doctors make claims that seem more reasonable but which are known to be false. Their narrative attracts sincere people, who are later negated and embarrassed when the truth is revealed. One outcome is that many people remain quietly on the sidelines of debate out of fear of being ostracized later. A second outcome is that when well-intending analysts make an error or overreach in a sincere analysis, it is difficult for an independent observer to discern whether the overreaching statement was made because the analyst is “controlled opposition” or whether it was simply an honest mistake. Taken together, these two sets of controlled opposition figures work to discourage debate among dissenters and to discredit (or negate) those who seek to stop the harmful, Nietzschean plot.

Unfortunately, in our imaginary dystopian society, there are three reasons why this Hegelian Deception technique is highly effective with the overwhelming majority of doctors, whom I describe as the Timid Doctors. First, these Timid Doctors fear retribution by the Nietzschean government, which has the power to destroy a career by revoking the license to practice medicine and to label them “anti-cure”. Second, a Timid Doctor who goes along with the frontal lobotomy to remedy a headache is amply rewarded monetarily and within the dystopian society by those who support the Lobotomy Agenda. Third, the Timid Doctor rationalizes that it is not his or her job to approve medical recommendations, and so any blame should be transferred to the government, even though these Timid Doctors know that a frontal lobotomy is an inappropriate treatment for a headache. In other words, they are “just following orders”.

Fortunately, there also exists a group of courageous doctors, denoted “Good Doctors”, in our imaginary dystopian society who are focused only on what is the best care for the patient. At last, we come to the conundrum of our parable: How should the Good Doctor respond when a patient says, “I have a headache”?

The Good Doctor must confront the possibility, even if remote, that the patient might actually have a headache. As such, one problem faced by the Good Doctor is that a headache cannot truly be measured in a reliable fashion. Consider that if a doctor had a Headache-Meter which could detect whether or not a patient *really* has a headache, the Good Doctor’s decision would be well-informed. Unfortunately, no such Headache-Meter exists, even in our imaginary society. A headache is a subjective sensation that only the patient can experience and assess.

It might be tempting for the Good Doctor to express to the patient his/her disgust and frustration that the government is promoting the Lobotomy Agenda as a cure for headaches and to disclose that there is a strong likelihood that all the talk about headaches has actually led the patient to simply *believe* he/she has a headache. However, this approach carries risk. Some headaches are real, and if that is the case, the Good Doctor might be discredited as uncaring by the patient. Even worse, the Good Doctor might end up being labeled an “anti-cure” activist if a truly suffering patient reports the experience to the controlled media.

I contend that the best choice for the Good Doctor is to recommend to the patient a different option which has three desirable qualities. First, the option should do no harm. Second, the option should provide the patient with optimism that he or she might feel better as a result. And third, the option should be an inexpensive approach because the imaginary Nietzschean government and its controlled insurance companies will not assist in paying for anything other than a frontal lobotomy.

In my opinion, the Good Doctor should recommend to the patient: “Take two aspirin and call me in the morning.”

Naturally, once this approach is taken by enough Good Doctors, it would be criticized by both sides of the Hegelian Deception. Those who promote the Lobotomy Agenda would protest that aspirin will not be nearly as effective as the frontal lobotomy, which is the only government-approved way to treat a headache. In this fictitious setting, the Nietzschean Ruling Elite might even attempt to subdue the masses by proclaiming aspirin to be “dangerous”, despite the fact that low doses of aspirin have been used for treatment. Meanwhile, the doctors acting as controlled opposition promoting the “there is no such thing as a headache” concept will proclaim that it is a waste of two perfectly good aspirin. However, this criticism by both camps is unpersuasive to the Good Doctor.

By prescribing two aspirin, the Good Doctor gives the patient peace of mind. The two aspirin are tangible objects. You can count them: one, two. Do two aspirin truly cure a headache? It is difficult to say definitively since a headache cannot be measured. However, there are some known properties of aspirin which may provide a benefit, including headache relief. There is a chance that by taking them, the patient may feel relieved in knowing that a proactive step has been taken. In other words, the combination of time and the effect of positive thinking may lead to a benefit beyond any biochemical response brought about by the aspirin itself.

This approach may leave the purist dissatisfied. After all, the action of the Good Doctor did nothing to solve the overarching dilemma that the Nietzschean government is promoting frontal lobotomies as a cure for a headache. Moreover, we are left with the strong likelihood that either the patient never really had a headache in the first place or that time alone might have led the patient to feel better by morning. A pragmatist would counter simply: “It does not matter.” The two aspirin might provide a net overall benefit to the patient with assurance that no harm would be done. It certainly beats a frontal lobotomy. Furthermore, an optimist might suggest that buying time for individual patients will yield a benefit as the mass of humanity will eventually come to understand that a Nietzschean government which intends to harm the Herd must be fundamentally changed.

It is important to emphasize that although the two-aspirin option is, in my view, clearly the best option, it is not 100-percent guaranteed to work. There are some edge cases which demonstrate its imperfections. For instance, there is, perhaps, a one-in-a-million chance the headache is a symptom of brain cancer. In such an instance, the two-aspirin approach would not cure the cancer, and the patient would subsequently have to be given a higher level of care, including detection (i.e., a measurement) via medical imaging. A second possibility is that the patient may be so influenced by the incessant media depiction of headaches that absolutely nothing can be done to convince him/her that two aspirin might work. In this second instance, the Good Doctor might have to use more complex psychology to persuade the patient to avoid the frontal lobotomy.

These imperfections notwithstanding, I assert that the Good Doctor’s advice of “Take two aspirin and call me in the morning,” would be the best way to change the climate of fear and deception, one patient at a time, in this imaginary dystopian society.

*** “The only thing we have to fear… is fear itself.” -Franklin D. Roosevelt (also “Cult of Personality” by Living Colour) ***

Turning our attention to real-world matters, the topic described under the umbrella of “Climate Change” is a daily focus of the news media in Western societies. Any news stories about relatively common problems (such as droughts, hurricanes, forest fires,… and hot weather in Arizona in July) are associated with the idea that human use of inexpensive traditional energy sources leads to an increase in carbon dioxide in the atmosphere. The media generally suggest that “science” has determined that an increase in carbon dioxide causes worldwide temperatures to rise, which in turn leads to the disastrous outcomes that are the subject of the news story. Even when the news is about record cold temperatures, the news media is known to make oxymoronic statements like this: “In fact, paradoxically, a warmer climate may have actually contributed to the extreme cold.”

( https://www.cbsnews.com/news/climate-change-texas-winter-storms-arctic-cold/ )

When the citizens in Western societies are polled about which potential outcome of climate change concerns them most, ocean level rise is always either at or near the top of the list. However, measuring any change in sea level to within centimeters (or millimeters) is an exceptionally daunting task, perhaps analogous to measuring whether a person has a headache.

Consider these familiar examples of what occurs on different time scales to affect the water level by at least one meter:

1. Water waves affect water height and have a cycle time of a few seconds.

2. Tides affect water height with a cycle time of about 12 hours.

3. Weather systems affect water height with a cycle time of days.

Despite these challenges in the measurements, media reports describe the prospect of an increase in global mean sea level (GMSL) in both dire and certain terms. One example is given by “25 Years of Satellite Data Uncover Alarming Error in Sea Level Measurements: Climate change is worsening faster than we thought,” by Peter Hess, published 12 Feb 2018.

“The Earth is changing in ways that could cause an actual mass extinction during our lifetimes. In recent years, scientists have made it abundantly clear that humans are driving climate change, but what they’ve only recently found out is how quickly we’re making the Earth more inhospitable. In a new study published Monday in Proceedings of the National Academy of Sciences, they report that the rate at which the climate is getting worse is actually increasing each year.

[The first article referenced in the media report is https://www.pnas.org/doi/full/10.1073/pnas.1717312115 (R.S. Nerem et al., with the corresponding author being B.D. Beckley). This paper’s abstract is provided here for reference:

Using a 25-y time series of precision satellite altimeter data from TOPEX/Poseidon, Jason-1, Jason-2, and Jason-3, we estimate the climate-change–driven acceleration of global mean sea level over the last 25 y to be 0.084 ± 0.025 mm/y². Coupled with the average climate-change–driven rate of sea level rise over these same 25 y of 2.9 mm/y, simple extrapolation of the quadratic implies global mean sea level could rise 65 ± 12 cm by 2100 compared with 2005, roughly in agreement with the Intergovernmental Panel on Climate Change (IPCC) 5th Assessment Report (AR5) model projections.

The media report also references https://www.pnas.org/doi/full/10.1073/pnas.1616007114 (S. Dangendorf et al.).]

This could worsen coastal flooding, which was already predicted to get more severe when scientists predicted it at previous sea level measurements.

The new PNAS study comes hot on the heels of another study, published in December 2017, which also shows evidence that scientists have underestimated sea level rise. In that study, researchers say satellite altimetry is insufficient for measuring increased water volume because the seafloor is also sinking.

With these two papers, the case is becoming clearer that climate change research is constantly being refined and corrected, and as that happens, the picture becomes increasingly dire. Let’s see what we find out next.

Previous calculations were off because of a combination of miscalibrated instruments and unique environmental factors, write the researchers. For example, the satellite TOPEX/Poseidon was launched in 1992 to measure ocean topography, but the year before that, Mount Pinatubo erupted in the Philippines. These seemingly unconnected events actually interacted in a strange way. That eruption caused a modest decrease in global mean sea level, which made the first years of TOPEX/Poseidon’s measurements show a deceleration in sea level rise.

The next 25 years combined minor calibration errors in tide gauges and other satellite altimetry equipment, which ultimately averaged out to show a misleadingly steady increase. As part of the new study, the researchers corrected for this statistical noise, showing that the rate of sea level rise actually slowly accelerated over this time period.

A 0.08-millimeter annual rise* sounds small, but over many years it will add up to a big difference. By these calculations, global sea levels could rise by up to 77 centimeters by the year 2100.”

(*Note: The phrase “0.08-millimeter rise” is intended to communicate a 0.08 millimeter-per-year-squared acceleration.)
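The “simple extrapolation of the quadratic” mentioned in the quoted abstract can be checked with a few lines of arithmetic. The sketch below is only an illustration, not the authors’ method: it assumes that the 2.9 mm/y figure is the rate at the 2005 reference year and that only the acceleration uncertainty (± 0.025 mm/y²) is varied. The function name `projected_rise_cm` is made up for this example.

```python
def projected_rise_cm(rate_mm_per_yr, accel_mm_per_yr2, years):
    """Quadratic extrapolation: rise = rate*t + 0.5*accel*t^2, returned in cm."""
    rise_mm = rate_mm_per_yr * years + 0.5 * accel_mm_per_yr2 * years ** 2
    return rise_mm / 10.0


YEARS = 2100 - 2005   # extrapolation span used in the abstract
RATE = 2.9            # mm/y, from the abstract (assumed here to apply at 2005)
ACCEL = 0.084         # mm/y^2, from the abstract
ACCEL_ERR = 0.025     # mm/y^2, from the abstract

central = projected_rise_cm(RATE, ACCEL, YEARS)
upper = projected_rise_cm(RATE, ACCEL + ACCEL_ERR, YEARS)
lower = projected_rise_cm(RATE, ACCEL - ACCEL_ERR, YEARS)
linear_only = projected_rise_cm(RATE, 0.0, YEARS)  # same rate, no acceleration

print(f"central estimate : {central:.1f} cm")
print(f"upper / lower    : {upper:.1f} cm / {lower:.1f} cm")
print(f"no acceleration  : {linear_only:.1f} cm")
```

Under these assumptions the central value comes out near 65 cm and the upper value near 77 cm, which matches the figures quoted above. Without the acceleration term, the same rate gives roughly 28 cm, so more than half of the projected rise depends on an acceleration estimated from 25 years of data and extended over 95 years.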

***

The article refers to “TOPEX/Poseidon” which is the initial satellite used for altimetry. Later missions include Jason-1, Jason-2, and Jason-3. A natural question is, “What instruments are required in order to measure the global mean sea level in order to tell how and whether it is changing?” We might start with one which enables us to collect a time series of data at a single location in the ocean and compare the water height to a single point on land by averaging the data over time. This is the idea behind the ‘tide gauge’ described in the media report. It is tempting to think that, by replicating the process with thousands of tide gauges, one might be able to compute an average and discern a global mean sea level with relative ease. But measuring any sea level change at a single point is extremely challenging because the impact of wave height, tides, and weather patterns can change sea level on the order of meters within seconds, hours, and days, respectively. By contrast, those who suggest there is a change in global sea level describe it as occurring at a rate of millimeters per year. Therefore, it is challenging to collect data with sufficient precision to detect the sea level change at even a single point.
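To make the averaging idea concrete, here is a minimal sketch using entirely synthetic data. Every number in it is an assumption chosen for illustration (a 1-meter tide with a 12.42-hour period, hourly weather noise of 300 mm, and a 3 mm-per-year trend). It samples once per hour, so it ignores waves, and it treats the weather noise as independent from hour to hour and the land reference as perfectly fixed, both of which are optimistic simplifications.

```python
import math
import random

HOURS_PER_YEAR = 8766  # 365.25 days * 24 hours


def simulate_gauge(years, trend_mm_per_yr, tide_amp_mm=1000.0,
                   weather_sigma_mm=300.0, seed=0):
    """Return hourly synthetic water heights (mm) at one hypothetical gauge."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    heights = []
    for hour in range(years * HOURS_PER_YEAR):
        trend = trend_mm_per_yr * hour / HOURS_PER_YEAR
        tide = tide_amp_mm * math.sin(2 * math.pi * hour / 12.42)
        weather = rng.gauss(0.0, weather_sigma_mm)
        heights.append(trend + tide + weather)
    return heights


def annual_means(heights):
    """Average the hourly heights over each complete year."""
    n_years = len(heights) // HOURS_PER_YEAR
    return [
        sum(heights[y * HOURS_PER_YEAR:(y + 1) * HOURS_PER_YEAR]) / HOURS_PER_YEAR
        for y in range(n_years)
    ]


def fit_slope(values):
    """Ordinary least-squares slope of values against their index 0, 1, 2, ..."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


heights = simulate_gauge(years=20, trend_mm_per_yr=3.0)
slope = fit_slope(annual_means(heights))
print(f"recovered trend: {slope:.2f} mm/yr (true value used in the simulation: 3.0)")
```

In this idealized setting, averaging suppresses the meter-scale tide and noise well enough for the fit to land close to the trend that was put in. How well that carries over to a real gauge depends on the assumptions listed above, particularly on how correlated the weather-driven changes are and on whether the land benchmark stays put.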

Yet there is another difficulty in measuring sea level changes with a tide gauge which can play an even larger role. Because the length of time required to compute an average value is so high (years or even decades), one must consider that the elevation of the reference point on land may change by millimeters or centimeters at about the same rate as a possible sea level change. Without a sufficiently stationary reference point on land, the measurement of sea level at a single point may be influenced by bias.
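The effect of a moving reference point can be expressed as a subtraction: a gauge fixed to land records the change in the sea surface minus the change in the height of the land it sits on. The short sketch below uses hypothetical rates chosen only to illustrate the point; `apparent_trend` is an illustrative name, not a standard function.

```python
def apparent_trend(sea_mm_per_yr, land_mm_per_yr):
    """Trend recorded by a gauge: sea-surface change relative to its land benchmark."""
    return sea_mm_per_yr - land_mm_per_yr


# Hypothetical cases: the same 3.0 mm/yr sea-surface trend, three different land motions.
cases = {
    "stable land": 0.0,
    "land sinking 2 mm/yr": -2.0,
    "land rising 3 mm/yr": 3.0,
}
for label, land_rate in cases.items():
    print(f"{label:>22}: gauge shows {apparent_trend(3.0, land_rate):+.1f} mm/yr")
```

With these made-up numbers, the same sea-surface trend appears as +3.0, +5.0, or 0.0 mm/yr depending solely on the motion of the land, which is why the land reference must be known independently before a gauge record can be interpreted.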

Scientists often attempt to remove bias by ‘conditioning’ the data. As an example, suppose you turn on a digital scale and that it properly indicates zero pounds. You step on the scale and read the number on the display. But then suppose that, after you step off the scale, it erroneously reads 1.0 pound. Would you subtract 1.0 pound from the reading, or would you instead simply go with the unaltered readout? A logical solution to this scale example might be to start over and get a second reading after getting on the scale again, but no such option exists for the tide gauge problem because one would have to go back in time to resample the data. Perhaps the most objective compromise might be to split the difference and subtract 0.5 pounds from your indicated weight. If you do make an adjustment to the readout, you have performed a sort of ‘data conditioning’ to remove a bias. This example also illustrates the temptation one might have to choose a model of data conditioning which leads to a preferred outcome of the measurement.
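The scale example can be written out as a small calculation. In this sketch the 150.0-pound raw reading is a made-up value, and `corrected_reading` is a hypothetical helper that subtracts a zero offset taken somewhere between the “before” check (0.0 pounds) and the “after” check (1.0 pound).

```python
def corrected_reading(raw, zero_before, zero_after, weight_after=0.5):
    """Subtract a zero offset blended from the two zero checks.

    weight_after = 0.0 trusts only the 'before' check (no correction here),
    weight_after = 1.0 trusts only the 'after' check (full correction),
    weight_after = 0.5 splits the difference.
    """
    offset = (1.0 - weight_after) * zero_before + weight_after * zero_after
    return raw - offset


RAW = 150.0  # hypothetical display value while standing on the scale

choices = {
    "no correction": 0.0,
    "split the difference": 0.5,
    "full correction": 1.0,
}
for label, weight in choices.items():
    result = corrected_reading(RAW, zero_before=0.0, zero_after=1.0, weight_after=weight)
    print(f"{label:>20}: {result:.1f} lb")
```

All three choices are defensible, yet they give three different answers from the same raw reading. The size of that spread (one pound here) is a fair indication of how much the reported value depends on the conditioning model rather than on the measurement itself.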

***

In September 2022, the World Economic Forum (WEF) published an article on their website titled (perhaps tellingly), “Sea Level Rise: Everything You Need to Know.” This article also provides a description of tide gauges, and then proceeds to describe the newer satellite altimetry technique.

https://www.weforum.org/agenda/2022/09/rising-sea-levels-global-threat/

“How is sea level measured?

Sea level is the measurement of the sea’s surface height. Between the 1800s and early 1990s, tide gauges attached to structures such as piers measured global sea level, as research organization the Smithsonian Institution explains. Now satellites carry out this task by bouncing radar signals off the ocean’s surface.

Because local weather conditions and other factors can affect sea level, measurements are taken globally and then averaged out.

How much are sea levels rising?

Sea levels reached a record high in 2021, and NASA says sea levels are rising at unprecedented rates in the past 2,500 years.

The US space agency and other US government agencies warned this year that levels along the country’s coastlines could rise by another 25-30 centimetres (cm) by 2050. This 30-year increase would match the total sea level rise over the past 100 years.

The global sea level has risen by about 21cm since records began in 1880. While measuring in centimetres or even millimetres might seem small, these rises can have big consequences…

There are also likely negative feedback loops that could speed up glacier ice melt, which were not previously accounted for, explains Elena Perez, Environmental Resilience Lead at the World Economic Forum. For example, the Thwaites Glacier in Antarctica is disintegrating more quickly than anticipated. It’s been nicknamed the ‘doomsday glacier’ because without it and its supporting ice shelves, sea levels could rise more than 3-10 feet.”

***

This World Economic Forum (WEF) article presents the plot shown below. The black line representing what the WEF describes as “tide gauge data” is jagged and non-uniform while it mildly increases overall from 1900 to about 2020. The orange line representing “satellite data” starts in the early 1990s rather than 1900. In contrast to the tide gauge line, it indicates a fairly smooth and regular increase. Upon close inspection, it also curves slightly upward with time.

Importantly, neither time series shows error bars, which are commonly used to communicate the level of uncertainty in the data. The less jagged line for the satellite data, combined with a lack of error bars, is likely to lead the reader to conclude that the satellite data are more precise than the tide gauge data. Since the satellite data are represented as having a consistent pattern, the article implies that an extrapolation of the curve should be trusted as a predictor for future values of sea level rise, even if extrapolated over 30 years to 2050, or even 80 years to 2100.

But does this graph from the World Economic Forum article depict the tide gauge data and the satellite data appropriately?

***

The authors of “Reassessment of 20th century global mean sea level rise” (which is referenced in the media report as Dangendorf et al., https://www.pnas.org/doi/full/10.1073/pnas.1616007114 ) describe challenges posed by tide gauges:

“These tide gauges are grounded on land, and are thus affected by the vertical motion of the Earth’s crust, caused both by natural processes [e.g., glacial isostatic adjustment (GIA) after the last deglaciation or tectonic deformations] and by anthropogenic activities (e.g., groundwater depletion and dam building). As pointwise measurements, tide gauges further track local sea levels, which reflect the geographical patterns induced by ocean dynamics and geoid changes in response to mass load redistribution (e.g., due to the changes in the Earth’s unique 20th century Global Mean Sea Level (GSML) reconstruction.”

This article presents a figure (Figure S6, shown below) of a time series of six tide gauges around the Earth:

Its caption reads: “Virtual stations for the six oceanic regions based on different stacking approaches and corrections. Shown are the individual tide gauge records corrected for VLM, TWS, ice melting, and GIA geoid (gray) or just GIA (light red) and their respective virtual stations based on two different stacking approaches: averaging after removing a common mean, and stacking first differences (also known as rate stacking; see legend for a description of the line colors). Both approaches are used to overcome the problem of an unknown reference datum of individual tide gauges. Also provided for each region are the medians of linear correlations between the virtual stations and individual tide gauge records from both approaches (black and blue R values).”

In other words, the raw data sets from the tide gauges are not shown here. The red line represents the model with the least amount of “data conditioning,” but even it relies on a model for how land responds when glaciers change. Generally, if glaciers melt, the land mass below springs upward slightly. A glance at the red line subfigure labeled ‘(3) East Pacific’ suggests that the sea level measured by the tide gauge and with conditioning to remove GIA (but nothing else) apparently decreased by about 100 mm between 1950 and 2010. It would be interesting to see the plots of data for the six tide gauges prior to the removal of the (alleged) GIA bias. In particular, what might the original data reveal for the “(5) Subpolar Atlantic” gauge, which appears to have the smoothest increase, without the data conditioning for the GIA bias?

Even if one includes conditioned data, it is clear from these six plots alone that there is substantial deviation in the tide gauge data. Surely, error bars would be appropriate to include in the representation of tide gauge data.

*** What about the satellite data? ***

An official NASA website (https://sealevel.nasa.gov/faq/21/which-are-more-accurate-in-measuring-sea-level-rise-tide-gauges-or-satellites ) describes the advantages of an ideal satellite-based altimetry system and contrasts that approach with measurements by tide gauges.

The language on this official NASA website suggests an unconscious expectation of a sea-level rise, as opposed to either a decrease or no change in sea level, even though the tide gauge records shown in Fig. S6 clearly show that both rise and fall may occur. Neutral language would be “possible global mean sea level change”, which leaves open the possibility of a drop in sea level or no change whatsoever as outcomes which might also be measured by satellite altimeters. When scientists express an expectation for results to occur, it can influence the outcomes when choices about data conditioning are made. For example, an approach to data conditioning which leads to an “expected” outcome is likely to be given less critical scrutiny than an approach which leads to an “unexpected” outcome. This is sometimes called “confirmation bias”. I use bold font to illustrate examples:

“Which are more accurate in measuring sea-level rise: tide gauges or satellites?

Both methods produce accurate results, though they measure different aspects of sea-level rise. Both show, for example, that sea-level rise is accelerating. But tide gauges provide direct observations, over 75 years or more, for specific, sparse points on Earth’s surface.

Satellites cannot yet match this long-term record; the altimetry record so far is just shy of 30 years. But satellites provide something tide gauges can’t: nearly global coverage, more thoroughly recording planet-wide trends and explaining the changes behind them.

Satellite altimeters can measure the height of the ocean from space to centimeter or millimeter accuracy when averaged over the globe. Both measurement methods capture regional trends in sea-level rise, and tide gauges also can provide an approximation of global trends, helping to calibrate satellite measurements.”

***

There is an order of magnitude difference between centimeter accuracy and millimeter accuracy, so this statement is vague. For example, if one examines the data from the graph given by the WEF and accepts a 1 cm = 10 mm level of accuracy in the data, the “acceleration of sea-level rise” might be crudely evaluated by varying the initial data point, a point in the middle of the series, and the ending point by 10 mm. A depiction of this thought experiment is given below using a zoomed-in view of the graph. I have added blue dashed lines below and above the orange line in order to represent a +/- 10 mm uncertainty range in the satellite altimetry data. Three red circles have been added to indicate points near the uncertainty limits used in this example, and red dotted line segments connect the red circles.

Since the error could be 10 mm in either direction, the point for 1992 presented as about 124 mm on the y-axis might be 10 mm lower and therefore correspond to 114 mm. Now let’s select a point on the Satellite Data curve near the middle of the time series. For the year 2005, the y-axis value reads about 158 mm; but with an error of 10 mm, its true value might be 10 mm higher at 168 mm. Now let’s consider the point at the end of the series where the year is about 2018, and the sea level on the y-axis is about 203 mm. With an error of 10 mm, the true value might be 10 mm lower and thus equal to 193 mm.

With these three data points one can compute the slope of each of the two line segments generated by including the maximum allowable error in the measurement (based on 10 mm accuracy). The slope of the line segment from 1992 to 2005 is (168 mm – 114 mm)/13 years = 4.2 mm/yr. The slope of the line segment for the time period from 2005 to 2018 would be (193 mm – 168 mm)/13 years = 1.9 mm/yr. This example demonstrates that a 1 cm (10 mm) error in the data could lead to the opposite conclusion for the satellite data: there might be a very significant deceleration, rather than an acceleration, in the (alleged) sea-level rise. This can be seen in the slopes of the two line segments.

If the process were repeated using only 1 mm as the error, the results would be quite different. Repeating the steps while using 1 mm in place of 10 mm in the exercise above leads to a slope of (159 mm – 123 mm)/13 years = 2.8 mm/yr from 1992 to 2005, while the slope from 2005 to 2018 would be (202 mm – 159 mm)/13 years = 3.3 mm/yr. Indeed, if a 1.0 mm limit to the error were correct, one could use the data from this example to justify that an acceleration in the (alleged) sea-level rise is occurring.
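The arithmetic of this thought experiment can be reproduced with a short sketch. The three readings are the approximate values quoted above from the WEF graph; the function name `segment_slopes` and the worst-case sign pattern (first and last points low, middle point high) are illustrative choices, not part of any cited analysis.

```python
def segment_slopes(points, shifts):
    """Return slopes (mm/yr) of consecutive segments after shifting each level."""
    shifted = [(year, level + shift) for (year, level), shift in zip(points, shifts)]
    return [
        (l2 - l1) / (y2 - y1)
        for (y1, l1), (y2, l2) in zip(shifted, shifted[1:])
    ]


# Approximate (year, sea level in mm) values read off the graph, as quoted in the text.
readings = [(1992, 124.0), (2005, 158.0), (2018, 203.0)]

for err in (10.0, 1.0):
    # Shift the first and last points down and the middle point up by the error.
    early, late = segment_slopes(readings, (-err, +err, -err))
    print(f"error = {err:4.1f} mm: {early:.1f} mm/yr, then {late:.1f} mm/yr")
```

With a 10 mm error the second slope is smaller than the first; with a 1 mm error the order reverses, which is the point of the exercise.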

The statement on the NASA website “Satellite altimeters can measure the height of the ocean from space to centimeter or millimeter accuracy when averaged…” might also be understood to mean that a value between 1 mm and 10 mm is the appropriate value for the error. One can see that the ambiguity in the actual value might lead to opposite conclusions. Since the most calamitous predictions of sea-level rise are based on extrapolations of a second-order polynomial fit to the data, it is important to understand the true level of error – in terms of both precision and bias – in the satellite altimetry data. So let us inspect the methods used to determine sea-level via satellite altimetry.

***

The process used to collect data via satellite altimetry is much more complex than that for tide gauges.

A helpful pictorial depiction of the integrated system of measurement devices is given in the figure below (credit:

As the above image suggests, several sophisticated instruments are required. The organization EUMETSAT “is the European operational satellite agency for monitoring weather, climate and the environment from space. In addition, it is a partner in the cooperative sea level monitoring Jason missions (Jason-3 and Jason-CS/Sentinel-6) involving Europe and the United States.” The organization’s website ( https://www.eumetsat.int/jason-3-instruments ) provides a description of the different measurement systems:

(1) “The Poseidon-3B (supplied by Europe), is the mission's main instrument, derived from the altimeters on Jason-1 and Jason-2. It allows measurement of the range (the distance from the satellite to the Earth's surface), wave height and wind speed.”

The altimeter operates by sending out a short pulse of radiation and measuring the time required for the pulse to return from the sea surface. This measurement, called the altimeter range, gives the distance between the instrument and the sea surface, provided that the velocity of the propagation of the pulse and the precise arrival time are known.

However, the time-of-travel of the electromagnetic wave pulse is affected by atmospheric conditions. Therefore the satellite includes a device which provides “a measurement needed for correcting the data for atmospheric content, which affects time of travel of the electromagnetic wave emanating from the altimeter.” This device is described as an Advanced Microwave Radiometer.

If the altitude of a satellite in orbit were somehow held constant, the combination of the two devices above would yield a measurement of sea level change. But even in this idealized scenario, there could be difficulties. The altimetry satellites are stationed about 1,336 km (about 830 miles) above the Earth. While this sounds like a long distance, the speed of light is so large that the pulse returns to the satellite receiver in less than ten milliseconds. Therefore, the electronics must not only record data at a high data rate, they must also be durable in a space environment for years if not decades in order to exclude drift as a source of measurement error. It turns out that just such an error was identified in the TOPEX/Poseidon mission (T/P), and this will be discussed shortly. On Earth, one might simply replace the electronic components if a drift were seen. However, one of the difficulties of a space environment is that it is virtually impossible to make any such change in hardware after launch.
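To give a feel for the timing involved, the following minimal sketch computes the round-trip time of a pulse and the timing change that corresponds to a 1 mm change in range. It assumes the vacuum speed of light (ignoring the atmospheric delay discussed above) and a nominal altitude of 1,336 km, which is an assumed value for illustration; the function names are hypothetical.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s


def round_trip_ms(altitude_km):
    """Round-trip travel time in milliseconds for a pulse sent straight down."""
    return 2.0 * altitude_km / C_KM_PER_S * 1e3


def timing_for_range_ps(range_mm):
    """Change in round-trip time (picoseconds) for a given change in range (mm)."""
    c_mm_per_s = C_KM_PER_S * 1e6
    return 2.0 * range_mm / c_mm_per_s * 1e12


ALTITUDE_KM = 1336.0  # assumed nominal altitude, for illustration only

print(f"round trip: {round_trip_ms(ALTITUDE_KM):.2f} ms")
print(f"1 mm of range: {timing_for_range_ps(1.0):.2f} ps of round-trip time")
```

Under these assumptions a millimeter of range corresponds to only a few picoseconds of round-trip time, which suggests why long-term stability of the onboard electronics matters so much.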

Another complication is described in the Jason-3 Handbook (p. 18) (https://www.avl.class.noaa.gov/release/data_available/jason/j3_user_handbook.pdf ):

“A satellite orbit slowly decays due to air drag, and has long-period variability because of the inhomogeneous gravity field of Earth, solar radiation pressure, and smaller forces. Periodic maneuvers are required to keep the satellite in its orbit. The frequency of maneuvers depends primarily on the solar flux as it affects the Earth's atmosphere, and there are expected to be one maneuver (or series of maneuvers) every 40 to 200 days.”

Therefore, yet another measurement is required in order to know the precise position of the satellite at all times. In particular, the altitude of the satellite must be known, and the precision of the measurement is important. According to EUMETSAT, “the GPSP (Global Positioning System Payload) (supplied by the US) uses the Global Positioning System (GPS) to determine the satellite's position by triangulation. At least three GPS satellites are needed to establish the satellite's exact position at a given instant. Positional data are then integrated into an orbit determination model to continuously track the satellite's trajectory.”

The phrase “exact position” might look reassuring to a novice, but any seasoned draftsman or machinist (or engineer or scientist) will immediately cringe at such a description (with good reason, as will be discussed below).

In addition to the GPS, there are ground-based systems which may be used to track position. According to the same EUMETSAT website:

“DORIS (supplied by Europe) uses a ground network of 60 orbitography beacons around the globe, which send signals, in two frequencies, to a receiver on the satellite. The relative motion of the satellite generates a shift in the signal's frequency (called the Doppler shift) that is measured to derive the satellite's velocity. These data are then assimilated into an orbit determination models to keep permanent track of the satellite's precise position (to within three centimeters) in its orbit. Following lessons learned from Jason-2 improvements have been made to albedo and infrared pressure, ITRF 2008, pole prediction, Hill along-track empirical acceleration and on-board USO frequency prediction.”

The statement regarding “lessons learned,” with bold font added here, is curious, is it not? I will return to that issue momentarily.

***

A resolution of 3 centimeters may be impressive for many satellite applications, but one must bear in mind that an often-cited value for “Global Mean Sea Level change” is 3 mm per year. If global mean sea level changed by 3 mm per year, it would take ten years for the DORIS system to resolve any difference outside of its stated error band. As demonstrated above, a measurement of the acceleration (or deceleration) of (alleged) sea-level rise requires even more precision.
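The ten-year figure follows from dividing the stated resolution by the assumed rate of change. A minimal sketch of that division is given here; the function name `years_to_exceed` is hypothetical, and the calculation treats the 3 cm figure as a hard error band on a single measurement, which is the simplification used in the paragraph above.

```python
def years_to_exceed(resolution_mm, rate_mm_per_yr):
    """Years needed for a steady trend to accumulate to the stated resolution."""
    return resolution_mm / rate_mm_per_yr


# 3 cm = 30 mm resolution, 3 mm/yr trend (values from the text above).
print(years_to_exceed(30.0, 3.0))  # 10.0
```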

Another ground-based system is used to track the satellite’s position. The Jason-3 satellite is also equipped with a laser retroreflector array (LRA), which is “an array of mirrors that provide a target for laser tracking measurements from the ground. By analysing the round-trip time of the laser beam, we can locate where the satellite is in its orbit and calibrate altimetric measurements.”

Take notice that both the LRA and DORIS depend on ground-based stations, so their measurements are subject to the same bias present in tide gauges. The elevation of each station may vary over years or decades by several millimeters, maybe more. Are the data from these two systems adjusted using the same approaches applied to the tide gauges as described (above) by Dangendorf et al.?

***

Recall the media report statement, “With these two papers, the case is becoming clearer that climate change research is constantly being refined and corrected, and as that happens, the picture becomes increasingly dire.”

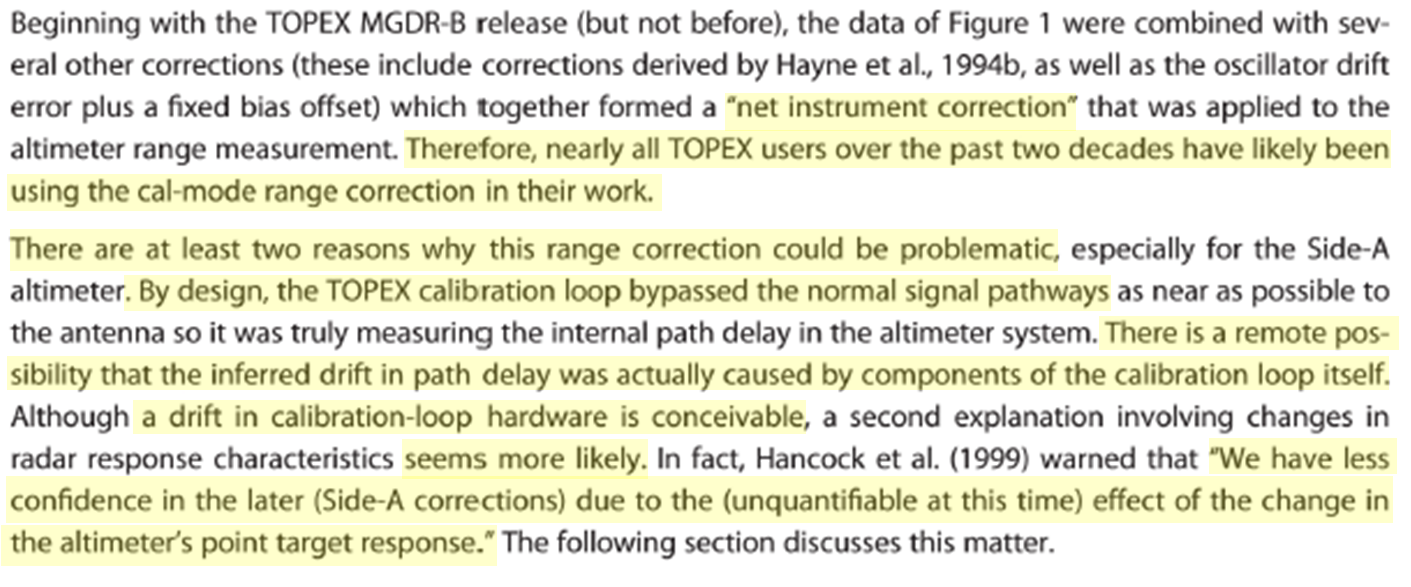

One such “correction” was explored by the same corresponding author (B.D. Beckley) in the cited work:

BD Beckley, PS Callahan, DW Hancock, GT Mitchum, RD Ray, “On the ‘Cal-Mode’ correction to TOPEX satellite altimetry and its effect on the global mean sea level time series.” J Geophys Res Oceans 122, 8371–8384 (2017).

The introductory two sentences are instructive, as shown in an inset and emphasized here.

“The TOPEX/Poseidon (T/P), Jason-1, Jason-2, and Jason-3 satellite altimeter missions have provided a continuous and near-global time series of sea level measurements, now extending over 24 years. Among the myriad applications of this time series, the determination of global mean sea level with a precision necessary to monitor sub-centimeter change is probably the most difficult.”

The introduction goes on to point out that, “In several cases, the tide gauge validation now in place have indeed uncovered spurious drifts in the (satellite) altimeter measurements,” and cites examples from the literature.

An entire section of the paper is devoted to a myriad of errors and bias corrections applied to the satellite altimetry data. The figure below describes a calibration mode, and includes only a portion of the description of factors which may influence the data conditioning.

The full article includes a more in-depth description. Without delving into the details, one may note, upon careful reading, that there is no discussion of possible bias or precision corrections due to “albedo and infrared pressure,” as mentioned by the EUMETSAT website as being a source of “lessons learned.”

The data conditioning which was applied requires judgment, and so three different approaches are described: “(1) MEaSUREs v3.2, (2) cal_1 unapplied, and (3) ToPex retracked.”

Let us skip ahead in this paper to their figure (“Figure 5”), given below, which shows the results of the satellite altimetry with these different data conditioning techniques. The first observation one might make is that each of three data sets indicated variability. In other words, the actual data lines appear rather “jagged” over the entire time frame. In the figure below, three curve fits are presented with dashed lines. It would seem that the graph on the WEF website corresponds to a smoothed representation of the actual data shown here.

Clearly, a smoothing function could also have been applied to the WEF representation of tide gauge data, but this does not appear to be the case. It seems likely that the WEF is overstating the precision of the satellite altimetry results, compared to the tide gauge data.

Now, let’s take a look at the three curve fits from the Beckley et al. paper. The dark blue line, which starts at the lowest value of relative sea level, represents data with a specific calibration applied. The red line was computed from the data using a different approach to the calibration. The cyan (light blue) line is computed with yet another correction applied to the data. The article includes technical details which seek to justify the values represented by the red line (“cal_1 unapplied”) and refers the reader to a different article for details of the data represented by the cyan line (“ToPex retracked”). However, the authors point out that the data for the solid blue line (“MEaSUREs v3.2”) had been used by scientists in the field for the previous 20 years.

The authors do provide some justification for their approach but admit the possibility that the measurement itself may be correct, and that the error may have been due to “a drift in calibration-loop hardware”. Their approach for providing revised data “seems more likely.” One of the chief difficulties with satellite altimetry is that the instrumentation cannot be wholly evaluated to determine error sources after launch, so one is left with conjecture about the cause of problems. In this instance, the authors opine that the drift in the electronics used for data acquisition seems more likely to be the source of bias, as opposed to the calibration electronics, which are also onboard the satellite.

One can see the difference in the outcome for the three data sets very readily by examining the authors’ “Table 2,” also given below. The first row, labeled “MEaSUREs,” corresponds to the dark blue line. The column labeled “Linear (mm/yr)” is broken into two time frames (1993-2002 and 1993-2016) in much the same way as in our discussion above. For this row, it can be seen that the mean value of the reported linear rate actually decreases from 3.52 to 3.41 mm/yr when the second portion of the time series is taken into account. Taken by itself, this would correspond to a deceleration in the rate of sea-level rise. In the rightmost column, the data are fit using a second-order polynomial. Although the mean value of +0.016 mm/yr^2 for the quadratic term would suggest an accelerating sea-level rise, the error bars disclose the possibility of a deceleration, and hence of a decreasing sea level over the long term, since 0.016 – 0.023 = –0.007 mm/yr^2, and this value is below zero.
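The check on the error band can be written out explicitly. This sketch uses only the values quoted above for the “MEaSUREs” row (0.016 with a half-width of 0.023 for the quadratic term, and linear rates of 3.52 and 3.41 mm/yr); the function name `interval` is hypothetical, and treating the quoted uncertainty as a symmetric half-width is an assumption.

```python
def interval(mean, half_width):
    """Return the (low, high) bounds of a symmetric uncertainty interval."""
    return mean - half_width, mean + half_width


# Quadratic term for the "MEaSUREs" row, as quoted in the text (mm/yr^2).
low, high = interval(0.016, 0.023)
print(f"quadratic term: {low:+.3f} to {high:+.3f} mm/yr^2")
print("zero lies inside the interval:", low <= 0.0 <= high)

# Change in the fitted linear rate between the two time frames (mm/yr).
print(f"change in linear rate: {3.41 - 3.52:+.2f} mm/yr")
```

Because zero lies inside the interval, the sign of the quadratic term is not determined by this row alone.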

Could it be that the observation that a negative value for the time-squared term is within the error bands motivated the lengthy reconsideration of the data presented in Beckley et al.? After all, if sea levels were projected either to “increase, decrease, or stay the same” depending on where the true data lie within the uncertainty range, the support for studying the effect using satellite altimetry might have been cut. After all, wouldn’t we have been able to make such a statement prior to the initiation of the study?

Indeed, the authors propose that the calibration mode was incorrect and that the subsequent data conditioning leads to not only a rising sea level, but also an increase in the rate of sea level rise, as indicated by the second and third rows.

One wonders, given the signs of unconscious bias in the NASA website described above, whether these authors would have taken such lengths to investigate the source of an error in the opposite direction. A clue to the answer to this question is suggested by “Figure 6” of the same article, shown below.

Here the results are shown with polynomial fits for the bottom two rows in the table above. The authors used a fifth-order polynomial to fit one data set and a fourth-order polynomial to fit another. In each case, their curves project sea-levels increasing by 8 mm/yr by 2015. The authors are careful to point out the limits of a high-order polynomial fit, emphasizing: “We should probably stress the obvious point that a fitted polynomial is not a physical model, so it reveals nothing about rates after 2016, nor does it shed light on causative mechanisms.”

Perhaps someone ought to inform the authors at the WEF and the many other large media outlets which have miserably failed to heed this “obvious” point “stressed” by Beckley et al. Moreover, one should increase the degree of a polynomial fit only as far as one’s confidence in the data allows. A linear fit is the most conservative approach. Yet the authors showed little hesitation in presenting sea-level rates in excess of double the mean from their fifth- and fourth-order curve fits, and the journal reviewers and editor failed to deter the authors from including them.

Thus, the climate of fear and deception is propagated.

***

It is reasonable and appropriate for a scientific researcher to challenge data which has been accepted for a long time. The analysis described in Beckley et al. was performed after data led them to question the mean values for sea level which had already been computed. In other words, their analysis, whether correct or incorrect, was pursued for data that had already been collected and analyzed “for two decades.”

But what if a new bias were discovered in the same data? Wouldn’t it also be appropriate to re-analyze the same data? Likewise, if a new source of imprecision were found, wouldn’t it be appropriate to consider adjusting the accepted data by broadening the uncertainty range?

Would NASA pursue an effort on the scope of that described by Beckley et al. if an observed bias and/or imprecision source suggested that the magnitude of the uncertainty range portrayed in their results is too narrow? Specifically, what if a new analysis called into question whether the measurement is sufficiently accurate to measure either the sea-level rise or the “acceleration” of sea-level rise?

Let us now return to the phrase, “Following lessons learned from Jason-2 improvements have be [sic] made to albedo and infrared pressure…” in regard to satellite altimetry.

To understand what is meant by albedo and infrared pressure, one must first be familiar with the concept of “radiation pressure.” A Scientific American article from 1972, accessible at https://www.scientificamerican.com/article/the-pressure-of-laser-light/ , is helpful in this regard, and an excerpt of its introduction is included here:

“The Pressure of Laser Light” by Arthur Ashkin on February 1, 1972

“It is common knowledge that light carries energy. Less obvious is the fact that light also carries momentum. When we sit in sunlight, we are quite conscious of the heat from the light but not of any push. Nonetheless, it is true, that whenever ordinary light strikes an object, the collision gives rise to a small force on the object. This force is called radiation pressure.

The possibility that light could exert pressure goes back to Johannes Kepler, who in 1619 postulated that the pressure of light is what causes comets' tails to always point away from the sun.”

The reason why radiation pressure acting on a comet would lead to the appearance of a tail shape can be explained if you think of a comet as being a grouping of objects of different sizes. (The picture below is a stock photo from Getty Images.)

Simplify the situation by considering two cubes of ice, the first measuring 1 cm by 1 cm by 1 cm and the second measuring 2 cm by 2 cm by 2 cm. For our example, let’s assume the density of ice for each cube is 1 gram per cubic centimeter. Since the mass of a cube is density times volume, the mass of the second cube is 2x2x2 = 8 times the mass of the first cube. For simplicity, let’s assume a flat face is pointed directly toward the sun for each cube, and let’s also assume the radiation pressure, in a nominal unit of force per square centimeter acting on the flat surface facing the sun, is the same for both cubes. The force is pressure times area, so the force acting on the second cube is 2x2 = 4 units of force. This compares to 1 unit of force acting on the first cube.

Newton’s Second Law states that force equals mass times acceleration. Since the force acting on the second cube is 4 times that on the first cube while its mass is 8 times greater, its acceleration must be half that of the first cube. The source of the radiation pressure is the sun, so the acceleration of both cubes is away from the sun, but the smaller cube accelerates away from the main body of the comet, also called the nucleus, faster than the larger cube. Once this effect is scaled, a tail shape can be seen with larger pieces near the main object of the comet while the harder-to-see smaller objects accelerate faster away from the nucleus of the comet. Thus, the tail of the comet appears to fade away with distance.
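The scaling in the two-cube example can be sketched in a few lines. The nominal units (pressure of 1 force unit per square centimeter, density of 1 gram per cubic centimeter) are those assumed in the example above; the function name `cube_acceleration` is hypothetical.

```python
def cube_acceleration(side_cm, pressure=1.0, density=1.0):
    """Acceleration (nominal units) of a cube with one face turned to the sun."""
    area = side_cm ** 2            # face exposed to the radiation pressure
    mass = density * side_cm ** 3  # mass = density x volume
    force = pressure * area        # force = pressure x area
    return force / mass            # Newton's Second Law: a = F / m


small = cube_acceleration(1.0)
large = cube_acceleration(2.0)
print(small, large, large / small)  # 1.0 0.5 0.5
```

In this idealized model the acceleration is inversely proportional to the side length, so smaller pieces are pushed away from the sun more quickly.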

In 1873, James Clerk Maxwell made a breakthrough in our understanding of radiation pressure by predicting its magnitude from his theory of electromagnetic waves. In fact, his work laid the groundwork for Ludwig Boltzmann’s development of the theoretical basis of the T^4 Radiation Law.

A brief history of the development of the T^4 Law is given by Crepeau (https://www.researchgate.net/profile/John-Crepeau/publication/267650295_A_Brief_History_of_the_T$_Radiation_Law/links/54 ), who writes that:

“Dulong and Petit performed a series of experiments to determine the rate of radiation heat transfer between two bodies. Their paper, which was awarded a prize from the French Académie des Sciences in 1818 proposed a relation for the emissive power of radiation, E = μa^T where a = 1.0077 and μ was a constant dependent on the material and size of the body. Even decades after the T^4 law was discovered, a textbook stated that the Dulong and Petit law, ‘seems to apply with considerable accuracy through a much wider range of temperature differences than that of Newton,’ and is based on ‘one of the most elaborate series of experiments ever conducted’…. This was the state-of-the-art when Josef Stefan, Professor and Director of the Institute of Physics at the University of Vienna began his own investigation of the problem [in the 1860’s]….

“Stefan did not feel comfortable with the Dulong and Petit law, so he began to delve into their work more closely. Drawing on his past experience in conduction heat transfer, he reconstructed their apparatus. Based on the design, he estimated that a significant portion of the heat was lost by conduction and not by radiation, as had been presumed by Dulong and Petit. By subtracting out the heat lost by conduction and reanalyzing the data, Stefan saw that the heat transfer was proportional to the temperature to the fourth power.”

Notably, Josef Stefan’s observation that the lauded Dulong and Petit experiments were biased in a way which led to a wrong conclusion took place several decades after the initial data were collected. He then corrected the data and formulated a new empirical relationship between temperature and the emissive power of radiation.

Crepeau continues: “Boltzmann (see picture below) was born on February 20, 1844, just outside the medieval protective walls of Vienna, to a middle class family. His father, Ludwig Georg, was a civil servant of the Habsburg Empire, working in the taxation office…. In a deceptively simple analysis, Boltzmann considered a Carnot cycle, using radiation particles as the working fluid. He based his ideas on an earlier paper of Adolfo Bartoli who described some ideas on radiation pressure. Boltzmann combined thermodynamics and Maxwell’s electromagnetic equations with the then novel idea that electromagnetic waves exert a pressure on the walls of an enclosure filled with radiation.”

For those interested, Boltzmann’s complete, yet refreshingly brief, derivation is given by Crepeau. An abbreviated version, along with a considerable account of the aftermath of Boltzmann’s work, is provided in “Black-Body Theory and the Quantum Discontinuity, 1894-1912” by Thomas Kuhn. Kuhn is perhaps better known for his work “The Structure of Scientific Revolutions” wherein he contends that science is often dramatically upended by “paradigm shifts” rather than progressing through an accumulation of knowledge, which he denotes “normal science”.

The combination of Stefan’s careful discernment of bias in pre-existing, “award-winning” experiments and Boltzmann’s clever derivation led to the T^4 Law, which states that the rate of radiative heat transfer away from an object is proportional to the object’s temperature raised to the fourth power. The constant of proportionality is denoted, appropriately, the Stefan-Boltzmann constant, and is usually given by the Greek letter sigma. One of the interesting facets of Kuhn’s work on the Black-Body Theory is his description of the depth of the relationship between Boltzmann and Max Planck, who viewed Boltzmann as a mentor, even when their opinions differed.
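As a brief illustration of the T^4 Law, the sketch below evaluates the emissive power E = (emissivity) x sigma x T^4 for an idealized surface. The numerical value of sigma is the standard SI value; the function name `emissive_power` and the sample temperatures are illustrative assumptions.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emissive_power(temp_k, emissivity=1.0):
    """Radiated power per unit area (W/m^2) at absolute temperature temp_k."""
    return emissivity * SIGMA * temp_k ** 4


for temp in (300.0, 600.0):
    print(f"T = {temp:.0f} K: {emissive_power(temp):.1f} W/m^2")

# Doubling the absolute temperature multiplies the emissive power by 2^4 = 16.
print(emissive_power(600.0) / emissive_power(300.0))
```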

Boltzmann was also highly regarded as a lecturer, and one of his students was Lise Meitner, who stated in a short memoir:

“Boltzmann had no inhibitions whatever about showing his enthusiasm while he spoke, and this naturally carried his listeners along. He was also very fond of introducing remarks of an entirely personal character into his lectures-I particularly remember how, in describing the kinetic theory of gases, he told us how much difficulty and opposition he had encountered because he had been convinced of the real existence of atoms, and how he had been attacked from the philosophical side, without always understanding what the philosophers had against him.”

The story of Lise Meitner is one of the most remarkable in all of the history of science. She was among the first to understand that experiments carried out in her laboratory in (Nazi) Germany demonstrated nuclear fission. However, she and her family were Jewish, so she was compelled to escape Germany and went to Stockholm, Sweden. Many believe that her work deserved a Nobel Prize in Chemistry, which her longtime collaborator Otto Hahn received instead. Later in her essay (available at https://www.aip.org/sites/default/files/Looking%20Back.pdf ), she describes her working group of scientists:

“There was really a very strong feeling of solidarity between us, built on mutual trust, which made it possible for the work to continue quite undisturbed even after 1933, although the staff was not entirely united in its political views. They were, however, all united in the desire not to let our personal and professional solidarity be disrupted. This was a special feature of our circle and I continued to experience it as such right up to the time I left Germany. It was something quite exceptional in the political conditions of that day. In this way, from 1934 to 1938, Hahn and I were able to resume our joint work, the impetus for which had come from Fermi’s results in bombarding heavy elements with neutrons. This work finally led Otto Hahn and Fritz Strassmann to the discovery of uranium fission. The first interpretation of this discovery came from O.R. Frisch and myself, and Frisch immediately demonstrated the great release of energy which followed from this radiation. But by then I was already in Stockholm.”

To clarify, Lise Meitner’s comment that the staff was “not entirely united in its political views” can be inferred to mean that her peers varied in their degree of acceptance of the policies of Adolf Hitler and the Third Reich. Those familiar with Lise Meitner’s very significant role in the discovery of nuclear fission may discern that her description exhibits an extraordinary degree of deference and humility, which is rare among scientists of her caliber. (For more on her story, see this article by Dr. Timothy Jorgensen: https://theconversation.com/lise-meitner-the-forgotten-woman-of-nuclear-physics-who-deserved-a-nobel-prize-106220 which is also the source of her picture above.)

*** “Three. That’s the magic number. Yes, it is. It’s the magic number.” – The Magic Number, De La Soul ***

In its simplest form, the radiation pressure depends on characteristics of two bodies – (1) the source of radiation and (2) the body upon which the radiation acts. Increasing either the temperature or the emissivity of the source will lead to an increase in radiation pressure. In addition, increasing the reflectivity of the body upon which the radiation acts leads to a higher pressure acting upon it. In fact, radiation pressure acting upon a perfectly reflecting surface is double what the pressure would be if the same body were a perfect absorber.
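The factor of two can be checked with a few lines of Python. This is a minimal sketch, assuming a flat surface that faces the source squarely (normal incidence); the function name and the solar irradiance figure of roughly 1361 W/m^2 near Earth are my own illustrative inputs rather than values quoted in the sources discussed here.

```python
C = 299_792_458.0  # speed of light in m/s


def radiation_pressure(irradiance_w_m2, reflectivity):
    """Pressure (Pa) on a flat surface facing the source at normal incidence.

    reflectivity = 0.0 is a perfect absorber, 1.0 a perfect reflector.
    """
    if not 0.0 <= reflectivity <= 1.0:
        raise ValueError("reflectivity must lie between 0 and 1")
    return (1.0 + reflectivity) * irradiance_w_m2 / C


SOLAR_IRRADIANCE = 1361.0  # W/m^2 near Earth (assumed, illustrative)

absorber = radiation_pressure(SOLAR_IRRADIANCE, 0.0)
reflector = radiation_pressure(SOLAR_IRRADIANCE, 1.0)
print(f"perfect absorber:  {absorber:.3e} Pa")
print(f"perfect reflector: {reflector:.3e} Pa")
print(f"ratio: {reflector / absorber:.1f}")
```

The pressures involved are only a few millionths of a pascal, which is why the effect matters for millimeter-level orbit work and almost nowhere else.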

Satellite tracking and orbit prediction techniques typically attempt to account for radiation pressure due to the sun. This calculation is often a daunting task because the orientation to the sun must be known for each surface on the satellite. In addition, the reflectivity of each surface must be known in order to calculate the pressure. In turn, integrating the pressure over all the surfaces determines the force acting on the satellite, analogous to our example using cubes in a comet tail. The force leads to an acceleration, which leads to a slight change in the velocity and position of the satellite.

Material properties can sometimes change over time in a space environment, and if reflectivity changes, then the radiation pressure will also change. In addition, satellites may move in and out of shadow zones which occur when the Earth is between the sun and the satellite. So the precise calculation of the effect of radiation pressure on satellite orbits is challenging, to say the least, even when only two bodies, the sun and the satellite itself, are considered.
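To make the bookkeeping concrete, the surface-by-surface calculation described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the method used by any tracking agency: each face is treated as a flat, specularly reflecting plate, only the force component along the sun-to-satellite direction is kept, and the face list, the satellite mass, and the 1361 W/m^2 irradiance are hypothetical inputs.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def face_force_along_sun(irradiance, area_m2, reflectivity, incidence_deg):
    """Force (N) pushing a flat face away from the sun.

    incidence_deg is the angle between the face normal and the direction
    to the sun. Faces turned away from the sun receive no light.
    """
    cos_t = math.cos(math.radians(incidence_deg))
    if cos_t <= 0.0:
        return 0.0
    absorbed = 1.0 - reflectivity
    reflected = 2.0 * reflectivity * cos_t ** 2
    return (irradiance / C) * area_m2 * cos_t * (absorbed + reflected)


def satellite_acceleration(faces, mass_kg, irradiance=1361.0, in_shadow=False):
    """Sum the face forces and divide by mass; zero inside Earth's shadow."""
    if in_shadow:
        return 0.0
    total = sum(face_force_along_sun(irradiance, a, r, t) for a, r, t in faces)
    return total / mass_kg


# Hypothetical satellite: (area in m^2, reflectivity, incidence angle in degrees)
faces = [(10.0, 0.2, 0.0), (2.0, 0.6, 60.0), (2.0, 0.6, 120.0)]
print(f"sunlit:    {satellite_acceleration(faces, 1000.0):.3e} m/s^2")
print(f"in shadow: {satellite_acceleration(faces, 1000.0, in_shadow=True):.3e} m/s^2")
```

Changing any reflectivity in the list, or flipping the shadow flag, changes the result, which is exactly why aging materials and eclipse passages complicate the real calculation.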

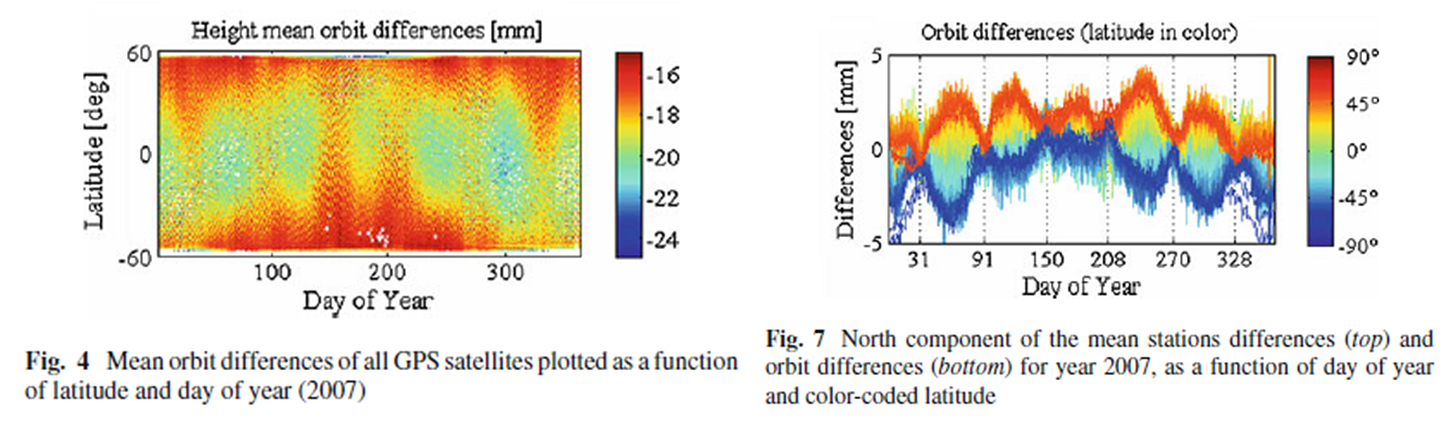

In 2012, Rodriguez-Solano et al. published an article demonstrating that a third object must be considered in order to determine the true location of the satellites which serve as part of the Global Positioning System (GPS). The third object is the planet Earth. At the time of this writing, the article “Impact of Earth radiation pressure on GPS position estimates” is available at:

https://boris.unibe.ch/17848/1/Rodriguez-Solano2012_Article_ImpactOfEarthRadiationPressure.pdf (and is also available behind a pay wall at: https://link.springer.com/article/10.1007/s00190-011-0517-4 )

GPS satellites orbit at around 20,000 km, well above the 1380 km orbit of satellites used for satellite altimetry. Recall the statement ( https://www.eumetsat.int/jason-3-instruments - also described above), that “At least three GPS satellites are needed to establish the satellite's exact position at a given instant.” Rodriguez-Solano et al. point out that the GPS satellite locations themselves are not known to ‘exact’ precision:

“In previous studies, it has been shown that Earth radiation pressure has a non-negligible effect on the GPS orbits, mainly in the radial component…. It is shown that Earth radiation pressure leads to a change in the estimates of GPS ground station positions, which is systematic over large regions of the Earth. This observed ‘deformation’ of the Earth is towards North–South and with large scale patterns that repeat six times per GPS draconitic year (350 days), reaching a magnitude of up to 1 mm.”

The terminology “radial component” refers to the direction pointing away from the center of the Earth in this context. For satellite altimetry to provide accurate data, the satellite’s precise distance from the center of the Earth must be known. Any imprecision in this measurement changes the reference point for altimetry in much the same way that the rise or fall of ground references affects tide gauge data.

The Earth contributes to the radiation pressure acting on a satellite in two ways. First, the Earth emits radiation due to its temperature. Because its temperature is much lower than that of the sun, its emission has a much smaller effect. The second way in which Earth affects the radiation pressure is via its albedo (principally due to clouds), which reflects sunlight onto the surfaces of the satellite. The Rodriguez-Solano et al. article demonstrates that the magnitude of errors in estimated horizontal ground station positions depends on the time of year. In their “Figure 5” shown below, the estimates for one day (“Day 61”) and for a day 30 days later (“Day 91”) differ by about a millimeter, with some dependence on location around the Earth. In other words, the stationary ground stations are perceived by GPS satellites as moving sideways.

The authors describe it as follows:

“Earth radiation pressure mismodeling causes a very particular perturbation at the millimeter level in the daily position estimates of GPS ground tracking stations. Figure 5 shows horizontal displacement vectors between solutions with and without modeled Earth radiation pressure. This is shown for 2 days of 2007, one where the North orbit differences are large (day 61) and one where they are minimal (day 91).”

Although a difference of plus-or-minus 1 mm in the horizontal direction is problematic, the bias in the radial direction is an order of magnitude higher. Rodriguez-Solano et al. continue:

“The orbits of the two GPS satellites equipped with laser retro reflector arrays (LRA) show a consistent radial bias of up to several cm, when compared with the Satellite Laser Ranging (SLR) measurements, known as the GPS–SLR orbit anomaly. This bias was observed for the Center for Orbit Determination in Europe (CODE) final orbits with a magnitude of 3–4 cm by Urschl et al. (2007)….”

Their model, which included an estimate of Earth radiation effects, yielded results which approach those found in what researchers describe as “the GPS-SLR anomaly”:

“The most prominent effect of Earth radiation pressure on the GPS orbits is a radial offset of 1–2 cm, observable in the height component… The reason for this radial reduction of orbits is that GPS measurements, being essentially angular measurements due to required clock synchronization, mainly determine the mean motion of the satellite. As a matter of fact, a constant positive radial acceleration (equivalent to a reduction of the product of the gravitational constant and the mass of the Earth) decreases the orbital radius according to Kepler’s third law.”
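The Kepler’s-third-law argument in this quotation can be sketched numerically. The sketch below assumes a circular orbit whose mean motion is held fixed by the measurements, and treats a constant outward acceleration as a reduction of the effective gravitational parameter. The orbital radius of about 26,560 km and the acceleration of 1e-9 m/s^2 are illustrative values I have assumed for the purpose of the example; they are not taken from the article.

```python
MU_EARTH = 3.986004418e14  # gravitational constant times Earth's mass, m^3/s^2


def radius_shift(radius_m, radial_accel_m_s2):
    """Change in orbital radius (m) when the mean motion is held fixed.

    Kepler's third law: n^2 * a^3 = mu. A constant outward acceleration
    lowers the effective mu to mu - accel * r^2, so for the same n the
    radius scales with the cube root of the effective-to-true mu ratio.
    """
    mu_ratio = 1.0 - radial_accel_m_s2 * radius_m ** 2 / MU_EARTH
    return radius_m * (mu_ratio ** (1.0 / 3.0) - 1.0)


GPS_RADIUS = 2.656e7   # m from Earth's center (assumed, illustrative)
ASSUMED_ACCEL = 1.0e-9  # m/s^2 outward (assumed, illustrative)

shift = radius_shift(GPS_RADIUS, ASSUMED_ACCEL)
print(f"radius shift: {shift * 100:.2f} cm")
```

With these assumed inputs the shift is negative, meaning a lower estimated orbit, and of centimeter scale, which is the same order as the 1–2 cm radial offset the authors report.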

The bias is shown by Rodriguez-Solano et al. using two formats – one in their Figure 4 and one in their Figure 7, shown side-by-side below. Each figure shows that a model accounting for radiation pressure leads to both (1) a bias toward a lower orbit height of about 2 centimeters and (2) cyclic behavior throughout the year of plus-or-minus about 3 mm, depending on day of the year and satellite latitude.

Moreover, the authors add a comment which implies that modeling Earth radiation effects would be more complex for an altimetry satellite with a much lower Earth orbit:

“For the Earth radiation, it was found that the use of an analytical model (based on constant albedo) or one based on numerical integration of Earth’s actual reflectivity and emissivity data, give similar results for the irradiance acting on the GPS satellites (difference of up to 10%), mainly due to the high altitude of these space vehicles.”

The model applied by Rodriguez-Solano et al. assumed constant albedo for GPS satellites, but the day-to-day variation in cloud cover over the Earth could limit the accuracy of this simplification for predicting the orbit height of satellites used for altimetry and sea-level measurements.

Even when data collected from all GPS satellites are used to determine the location of the center of the Earth, the model used by Rodriguez-Solano et al. predicts that Earth radiation pressure affects the results by over 1 mm, with variation from day to day, as they demonstrate in their Figure 9 (represented below).

If one considers that only a subset of the GPS satellites is used to locate altimetry satellites like Jason-2 and Jason-3, is it any wonder that EUMETSAT writes, “Following lessons learned from Jason-2 improvements have be [sp.?] made to albedo and infrared pressure…”? Incidentally, the first time I read this sentence, I assumed the phrase ‘have be made’ was simply intended to mean ‘have been made,’ but it might instead have been intended to state ‘have to be made.’ Is this, perhaps, the product of a Freudian slip?

Given the statement by EUMETSAT regarding “lessons learned,” it is clear that Earth radiation pressure affected the satellite altimetry data collected by Jason-2 and earlier satellite altimetry missions to a degree that, at a minimum, increases the uncertainty range.

Has NASA re-analyzed the data presented in Beckley et al. to account for this millimeter-level day-to-day variation in geocenter location due to Earth radiation pressure? Has the reference value for the radial position, which serves as the point of reference for the satellite altimetry data, been adjusted for the effect of Earth radiation pressure using this new information? If so, has a revised uncertainty range been published? If not, why not? Given that the EUMETSAT website states that the GPS system provides the exact location of the satellites used for altimetry, perhaps we should go over that “lesson” once again as it seems not to have been “learned”.

***

To summarize:

1) The ocean surface height changes by 1000 millimeters or more due to wave height, weather patterns, and tides.

2) Data collected from tide gauges vary significantly over the Earth. These data are “conditioned” prior to presentation. Factors such as elevation changes of the ground references introduce bias, and removing that bias requires some judgment. The conditioned tide gauge data are used to calibrate satellite altimetry probes.

3) Satellite altimeters operate by sending an electromagnetic wave signal which bounces off the ocean’s surface back to the altimeter. The round-trip time delay yields a height measurement, but this delay can be affected by atmospheric conditions, so an additional sensor is used to condition the data with the nominal goal of eliminating this bias.

4) The electronics used to collect the data may change over time due to the harsh space environment. After launch, performing diagnostic checks on signal processors is difficult, and this occasionally leads investigators to make subjective decisions which “seem more likely” during data conditioning.

5) Over time, satellite altimeters are affected by air drag, and therefore orbit height changes. Satellite maneuvers take place about every 60 days to maintain orbit.

6) Satellite location, including altitude, is determined by the GPS satellite system. The EUMETSAT website states the location measurement is “exact.”

7) A model of Earth radiation pressure on the GPS satellite system leads to a bias in measuring the altitude of the altimetry satellite. If we use the values in Rodriguez-Solano et al., the bias in GPS orbit height would be about 20 mm, while the estimated horizontal positions of ground stations shift by about 1 mm. The bias also varies with latitude.

8) According to Rodriguez-Solano et al., the value of the bias fluctuates by about 5 mm over a 30-day period for GPS satellites. Thus, the character of the bias from GPS satellites is similar to that of tide gauges in that the reference point is not fixed.

9) Even a simple model of Earth radiation pressure leads to a day-to-day variation of over 1 millimeter in Earth’s geocenter location when assessed by GPS satellites. The behavior of clouds, one source of albedo, is not taken into account in this model, and so the actual effect would be even more difficult to predict or ascertain.

10) Despite these sources of bias and uncertainty, the data collected by the satellite altimeters are said to show a rise in sea level of about 3 millimeters per year.

11) Moreover, an analysis of the data is said to show an acceleration of the sea-level rise over the course of decades. The method used for this prediction is a polynomial curve fit. The curve fit was performed after “data conditioning” had been applied to data more than 15 years old, using assumptions which “seem more likely” since the calibration electronics cannot be fully tested on the satellite in orbit.

12) The authors who performed the polynomial curve fit state: “We should probably stress the obvious point that a polynomial fit is not a physical model, so it reveals nothing about rates after 2016, nor does it shed light on causative mechanisms.”

13) The abstract of the follow-on article with the same corresponding author states: “Simple extrapolation of the quadratic implies global mean sea level could rise 65 ± 12 cm by 2100 compared with 2005.”

14) Media reports lead by stating: “The Earth is changing in ways that could cause an actual mass extinction during our lifetimes. In recent years, scientists have made it abundantly clear that humans are driving climate change, but what they’ve only recently found out is how quickly we’re making the Earth more inhospitable.” Somehow, the report mysteriously blames Mount Pinatubo for the “data conditioning” applied to the altimetry data.

15) The World Economic Forum leverages NASA statements to its own ends, stating, “Sea levels reached a record high in 2021, and NASA says sea levels are rising at unprecedented rates in the past 2,500 years. The US space agency and other US government agencies warned this year that levels along the country’s coastlines could rise by another 25-30 centimetres (cm) by 2050.” Once more, this last statement includes the extrapolation of data based on a polynomial curve fit.
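Items 11 through 13 turn on how a quadratic curve behaves when it is extended far beyond the data. A minimal Python sketch shows the sensitivity. The 3 mm per year rate and the 95-year span from 2005 to 2100 come from the summary above; the acceleration values are hypothetical inputs chosen only to show how strongly the end result depends on the curvature term, and they are not values taken from the cited articles.

```python
def quadratic_rise_mm(years_ahead, rate_mm_per_yr, accel_mm_per_yr2):
    """Sea-level change (mm) from extending a quadratic: r*t + 0.5*a*t^2."""
    return rate_mm_per_yr * years_ahead + 0.5 * accel_mm_per_yr2 * years_ahead ** 2


YEARS = 2100 - 2005  # span quoted in item 13
RATE = 3.0           # mm per year, from item 10

# Hypothetical accelerations in mm per year squared.
for accel in (0.0, 0.04, 0.08):
    rise_cm = quadratic_rise_mm(YEARS, RATE, accel) / 10.0
    print(f"acceleration {accel:.2f} mm/yr^2 -> {rise_cm:.1f} cm by 2100")
```

Because the curvature term grows with the square of the time span, a small change in the fitted acceleration, of the kind that different “data conditioning” choices could plausibly produce, moves the year-2100 figure by tens of centimeters.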